Hello everybody

I’d like to discuss a problem I’m facing these days while developing for Rack, which manages polyphony in its own way.

I come from the VST development world. There, when my plugin receives MIDI notes (i.e. gates in Rack), I usually manage them with an internal voice buffer (theoretically with infinite slots), which allocates a new voice if some older ones are still processing (for example, releasing).

In Rack the situation is a bit different. I’m building a sort of synth/sampler module that takes a poly cable as input (up to 16 channels carrying gate signals) and outputs a poly cable with the same number of channels, where each output voice index matches the input one. I see many modules work like that in Rack, so that each voice can later be chained with additional poly effects (poly filter, ADSR, etc.).

Internally, for each voice, when I receive a gate off, the module applies a short “smoothing” fade to the processed signal (~2 or 3 ms), which removes clicks and artefacts when the voice stops.

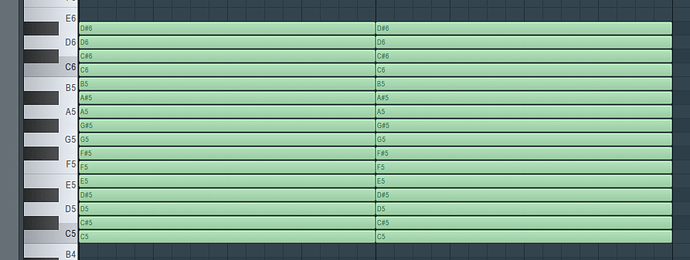
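
As a rough illustration of this kind of gate-off smoothing, here is a minimal sketch that assumes a linear ramp. The names `GateFade`, `setFade` and `process` are hypothetical and not taken from the actual module; in a Rack module something like this would run once per sample and per channel.

```cpp
#include <cassert>

// Hypothetical per-voice fade: the gain is 1 while the gate is high and
// ramps linearly down to 0 over a fixed time once the gate goes low.
struct GateFade {
    float gain = 0.f;  // gain currently applied to the voice output
    float step = 0.f;  // gain decrement per sample while fading

    // fadeMs and sampleRate are assumptions, e.g. 3 ms at 48 kHz.
    void setFade(float fadeMs, float sampleRate) {
        assert(fadeMs > 0.f && sampleRate > 0.f);
        step = 1.f / (fadeMs * 0.001f * sampleRate);
    }

    // Call once per sample with the current gate state.
    // Returns the gain to multiply the voice signal by.
    float process(bool gate) {
        if (gate) {
            gain = 1.f;  // gate high: full level (no attack smoothing here)
        } else {
            gain -= step;  // gate low: continue the fade-out
            if (gain < 0.f)
                gain = 0.f;
        }
        return gain;
    }
};
```

With a 3 ms fade at 48 kHz the ramp takes about 144 samples, during which the voice still produces audible output after the gate has gone low.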

The problem appears when I connect this module to a sequencer at its input. What if that sequencer (which I’ve also made) sends adjacent gates? For example:

- channel 1: gate on -> gate off -> wait 1 ms -> gate on -> gate off -> and so on…
- channel 2: gate on -> gate off -> wait 1 ms -> gate on -> gate off -> and so on…
- …
- channel 16: gate on -> gate off -> wait 1 ms -> gate on -> gate off -> and so on…

When the synth module gets the gate off, it starts smoothing… but the smoothing lasts longer than the gap before the next gate on, so it “overlaps” the new note.
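
To put numbers on the overlap, here is a small sketch of the arithmetic. The 48 kHz sample rate is an assumption, and `msToSamples` and `overlapSamples` are hypothetical helper names.

```cpp
#include <cassert>

// Convert a duration in milliseconds to the nearest whole number of samples.
int msToSamples(double ms, double sampleRate) {
    assert(ms >= 0.0 && sampleRate > 0.0);
    return static_cast<int>(ms * sampleRate / 1000.0 + 0.5);
}

// Number of samples during which the previous note's fade is still running
// after the next gate on has already arrived on the same channel.
int overlapSamples(double fadeMs, double gapMs, double sampleRate) {
    int fade = msToSamples(fadeMs, sampleRate);
    int gap = msToSamples(gapMs, sampleRate);
    return fade > gap ? fade - gap : 0;
}
```

For a 3 ms fade and a 1 ms gap at 48 kHz, `overlapSamples(3.0, 1.0, 48000.0)` gives 96 samples (2 ms) in which the old tail and the new note would share the same channel.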

I have three ways to resolve this in mind, but unfortunately each has problems:

- Delay the incoming gate on until the smoothing of the previous gate has finished. Problem: delaying the voice by another 1 or 2 ms would create phase effects. 1 ms is fine, but 2 or 3 ms seems bad.

- Blend (within an internal buffer) the smoothing tail of the previous note with the new one, on the same voice/channel. Problem: further down the chain, the other modules will process the previous and new signals together (since they are blended), which can create bad artefacts (think, for example, of a compressor, which can be triggered differently because of the combined previous + new content).

- Every time I receive a new gate, internally use a “free” voice, so voice index 1 can be moved to voice index 7 if that one is free and not releasing, keeping each signal running separately and without delay. Problem: this only works when receiving up to 8 adjacent notes, because if I use 16 adjacent notes, when I gate them all off, all 16 voices are busy releasing, so the module will play nothing new, just the release tails.
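
The limitation of the third option can be sketched as follows. This is only an illustration under assumptions, not the module's real allocator: `VoicePool`, `allocate`, `gateOff` and `releaseFinished` are hypothetical names, and the pool is fixed at 16 voices to match the poly cable limit.

```cpp
#include <array>
#include <cassert>

enum class VoiceState { Free, Playing, Releasing };

// Hypothetical voice pool limited to the 16 channels of a poly cable.
struct VoicePool {
    static constexpr int kMaxVoices = 16;
    std::array<VoiceState, kMaxVoices> state{};  // all voices start as Free

    // Returns the index of the first voice that is neither playing nor
    // releasing, or -1 if every voice is busy.
    int allocate() {
        for (int i = 0; i < kMaxVoices; ++i) {
            if (state[i] == VoiceState::Free) {
                state[i] = VoiceState::Playing;
                return i;
            }
        }
        return -1;
    }

    // Gate off: the voice keeps running until its fade has finished.
    void gateOff(int v) {
        assert(v >= 0 && v < kMaxVoices);
        if (state[v] == VoiceState::Playing)
            state[v] = VoiceState::Releasing;
    }

    // Called when the fade of voice v has reached zero.
    void releaseFinished(int v) {
        assert(v >= 0 && v < kMaxVoices);
        state[v] = VoiceState::Free;
    }
};
```

With 8 notes, the second batch of gates lands on voices 8 to 15 while voices 0 to 7 are still releasing. With 16 notes, once all of them are gated off there is no free voice left, and `allocate()` returns -1 until at least one fade has finished.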

I can’t see any other alternatives, but you probably can.

How would you manage this situation? Thanks