Hi everyone! I’ve been working on a module that uses a ring buffer for recording continuous audio. I wanted to share a technique that I used to solve an issue when reading audio from the buffer close to the write head.

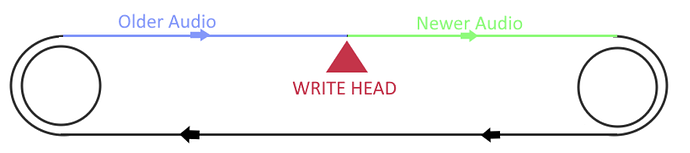

Imagine it like a spool of audio tape, like an old tape echo:

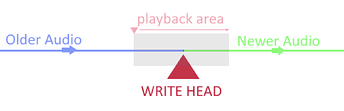

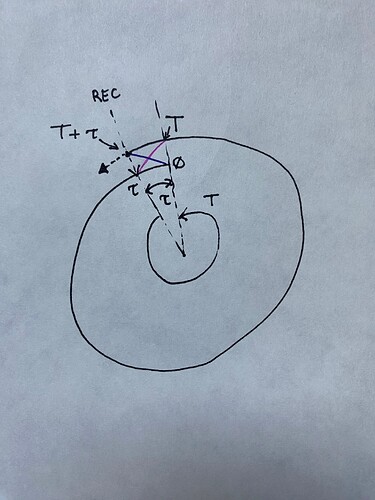

The issue arises when you attempt to play a section of audio that spans both older and newer audio, i.e. a section that crosses the write head.

When the read head reaches the write head, there’s a discontinuity between the older (previously recorded) audio and the newer audio which can cause an audible “pop”.

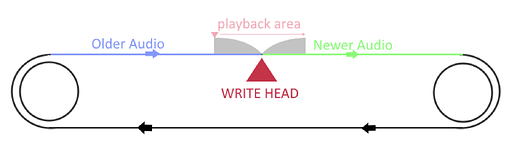
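To make the discontinuity concrete, here is a minimal Python sketch (not the module's actual code). It records a sine wave into a small ring buffer until the write head has wrapped, then compares the sample-to-sample step across the write head with the largest step anywhere else. The buffer size, tone frequency, and function names are all assumptions chosen for illustration.

```python
import math

def record_into_ring(size, total_samples, freq_hz=220.0, sample_rate=48000.0):
    """Write `total_samples` of a sine wave into a ring buffer of `size` samples.

    Returns the buffer and the final write position (the next index to be
    overwritten, which holds the oldest sample once the buffer has wrapped).
    """
    ring = [0.0] * size
    write_pos = 0
    for n in range(total_samples):
        ring[write_pos] = math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
        write_pos = (write_pos + 1) % size
    return ring, write_pos

def step_sizes(ring, write_pos):
    """Return (step across the write head, largest step elsewhere)."""
    size = len(ring)
    steps = [abs(ring[(i + 1) % size] - ring[i]) for i in range(size)]
    # The seam is the step from the newest sample (just before the write
    # head) to the oldest sample (at the write head).
    seam_index = (write_pos - 1) % size
    seam_step = steps[seam_index]
    typical_max = max(s for i, s in enumerate(steps) if i != seam_index)
    return seam_step, typical_max

# Hypothetical sizes: a 1000-sample buffer that has wrapped once.
ring, write_pos = record_into_ring(size=1000, total_samples=1300)
seam_step, typical_max = step_sizes(ring, write_pos)
```

With these assumed values the step across the write head is many times larger than any step inside the continuous audio, and that jump is what is heard as a pop.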

When I discussed options with an AI, it kept urging me to “crossfade” between the two, but I don’t think that’s actually possible or logical.

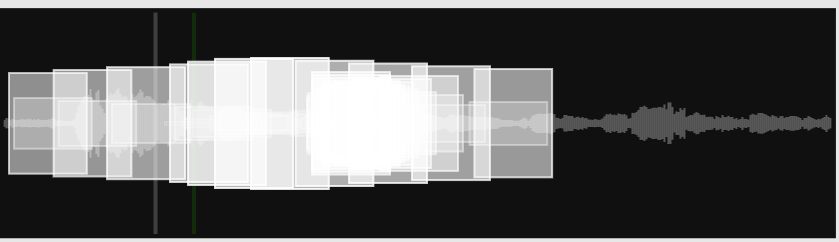

Instead, I’m using a fast fade-out as the read head (a.k.a. the playback position) approaches the write head, and then a fade-in after it passes the write head.
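As a rough illustration of that fade, the gain can be computed from the wrap-around distance between a sample index and the write head. The sketch below is Python rather than the module's own language, and the name `fade_gain` and its parameters are assumptions. It uses a raised-cosine curve, so the gain is 0.0 at the write head and 1.0 at or beyond the edge of the fade zone.

```python
import math

def fade_gain(read_index, write_pos, buffer_size, fade_width):
    """Gain in [0.0, 1.0] for a sample at `read_index`.

    0.0 exactly at the write head, rising smoothly to 1.0 once the sample is
    `fade_width` or more samples away, measured around the ring.
    """
    if buffer_size <= 0:
        return 0.0
    fade_width = max(1, fade_width)  # avoid division by zero
    direct = abs(write_pos - read_index) % buffer_size
    distance = min(direct, buffer_size - direct)  # shortest way around the ring
    if distance >= fade_width:
        return 1.0
    t = distance / fade_width  # linear position inside the fade zone
    return 0.5 * (1.0 - math.cos(math.pi * t))  # raised-cosine smoothing
```

Because the gain depends only on distance, the same function gives the fade-out on approach and the fade-in after the read head has passed the write head.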

I’m just sharing in case anyone runs into a similar situation. And I’m all ears if anyone has a better approach.

Here’s pseudocode for my implementation:

// --- Assumed Interfaces for Dependencies ---

INTERFACE AudioBuffer

// Gets the total number of samples the buffer can hold

FUNCTION getSize() -> INTEGER

// Gets the current write position (like a playhead) in the buffer

FUNCTION getWritePosition() -> INTEGER

// Gets the raw left channel sample at a specific integer index

FUNCTION getSampleLeft(index: INTEGER) -> FLOAT

// Gets the raw right channel sample at a specific integer index

FUNCTION getSampleRight(index: INTEGER) -> FLOAT

END INTERFACE

ENUM InterpolationMethod

LINEAR, CUBIC, SINC // etc.

END ENUM

INTERFACE SampleInterpolator

// Sets the interpolation algorithm to use

PROCEDURE setMethod(method: InterpolationMethod)

// Calculates an interpolated sample value at a fractional read position.

// It uses 'sampleSourceFunc' to get raw sample values at integer indices.

// 'bufferSize' is provided for context (e.g., boundary handling).

FUNCTION interpolate(readPosition: FLOAT, sampleSourceFunc: FUNCTION(INTEGER) -> FLOAT, bufferSize: INTEGER) -> FLOAT

END INTERFACE

// Structure to hold a stereo sample pair

STRUCTURE StereoSample

left: FLOAT

right: FLOAT

END STRUCTURE

// --- Pseudocode for FadingBufferReader ---

CLASS FadingBufferReader

// --- Member Variables ---

PRIVATE buffer: REFERENCE to AudioBuffer // The audio buffer being read from

PRIVATE fadeZoneWidth: INTEGER // The number of samples around the write head to fade

PRIVATE interpolator: SampleInterpolator // Object to handle fractional sample interpolation

// --- Constructor ---

// Initializes the reader with a buffer and an optional fade zone width.

CONSTRUCTOR FadingBufferReader(inputBuffer: REFERENCE to AudioBuffer, initialFadeWidth: INTEGER DEFAULT 1000)

SET this.buffer = inputBuffer

// Ensure fade width is at least 1 to avoid division by zero later

SET this.fadeZoneWidth = MAX(1, initialFadeWidth)

// Create or initialize the interpolator, defaulting to Linear

CREATE this.interpolator

this.interpolator.setMethod(InterpolationMethod.LINEAR)

END CONSTRUCTOR

// --- Public Methods ---

// Gets an interpolated mono sample at the given fractional read position,

// applying fading near the write head.

FUNCTION getSample(readPosition: FLOAT) -> FLOAT

// Define a helper function that retrieves a raw *faded* mono sample

// at an integer index. This is what the interpolator will call.

DEFINE FUNCTION getFadedMonoSampleAtIndex(index: INTEGER) -> FLOAT

rawLeft = this.buffer.getSampleLeft(index)

rawRight = this.buffer.getSampleRight(index)

monoSample = 0.5 * (rawLeft + rawRight)

RETURN this.applyFadingIfNeeded(index, monoSample)

END FUNCTION

// Use the interpolator to get the final value

bufferSize = this.buffer.getSize()

RETURN this.interpolator.interpolate(readPosition, getFadedMonoSampleAtIndex, bufferSize)

END FUNCTION

// Gets an interpolated stereo sample pair at the given fractional read position,

// applying fading near the write head to both channels independently.

FUNCTION getSampleStereo(readPosition: FLOAT) -> StereoSample

// Define helper function for the left channel

DEFINE FUNCTION getFadedLeftSampleAtIndex(index: INTEGER) -> FLOAT

rawLeft = this.buffer.getSampleLeft(index)

RETURN this.applyFadingIfNeeded(index, rawLeft)

END FUNCTION

// Define helper function for the right channel

DEFINE FUNCTION getFadedRightSampleAtIndex(index: INTEGER) -> FLOAT

rawRight = this.buffer.getSampleRight(index)

RETURN this.applyFadingIfNeeded(index, rawRight)

END FUNCTION

// Use the interpolator separately for each channel

bufferSize = this.buffer.getSize()

interpolatedLeft = this.interpolator.interpolate(readPosition, getFadedLeftSampleAtIndex, bufferSize)

interpolatedRight = this.interpolator.interpolate(readPosition, getFadedRightSampleAtIndex, bufferSize)

// Combine into a stereo sample structure

RETURN CREATE StereoSample(left = interpolatedLeft, right = interpolatedRight)

END FUNCTION

// Updates the width of the fade zone.

PROCEDURE setFadeZoneWidth(width: INTEGER)

// Ensure fade width is at least 1

this.fadeZoneWidth = MAX(1, width)

END PROCEDURE

// Changes the interpolation method used.

PROCEDURE setInterpolationMethod(method: InterpolationMethod)

this.interpolator.setMethod(method)

END PROCEDURE

// Allows access to the underlying buffer object.

FUNCTION getBuffer() -> REFERENCE to AudioBuffer

RETURN this.buffer

END FUNCTION

// --- Private Helper Methods ---

// Calculates and applies a fade multiplier to a sample if its read position

// is close to the buffer's write position.

PRIVATE FUNCTION applyFadingIfNeeded(readIndex: INTEGER, sampleValue: FLOAT) -> FLOAT

writePos = this.buffer.getWritePosition()

bufferSize = this.buffer.getSize()

// Handle empty buffer case

IF bufferSize == 0 THEN

RETURN 0.0

END IF

// Calculate the minimum distance between the read index and write position,

// accounting for buffer wrap-around.

diff = writePos - readIndex

directDistance = ABSOLUTE_VALUE(diff)

wrapAroundDistance = bufferSize - directDistance

distance = MIN(directDistance, wrapAroundDistance)

// If the distance is greater than the fade zone, no fading is needed.

IF distance > this.fadeZoneWidth THEN

RETURN sampleValue

END IF

// Calculate a fade factor between 0.0 and 1.0.

// Factor is 0.0 at the write head (distance=0)

// Factor is 1.0 at the edge of the fade zone (distance=fadeZoneWidth)

fadeFactor = CAST_TO_FLOAT(distance) / CAST_TO_FLOAT(this.fadeZoneWidth)

// Ensure factor is clamped between 0 and 1 (optional, but good practice)

fadeFactor = CLAMP(fadeFactor, 0.0, 1.0)

// Apply a smoothing curve (cosine half-wave) for a smoother fade-in/out

// This maps the linear fadeFactor [0, 1] to a smooth curve [0, 1]

DEFINE PI = 3.14159...

smoothFactor = (1.0 - COS(fadeFactor * PI)) * 0.5

// Apply the fade multiplier to the sample

RETURN sampleValue * smoothFactor

END FUNCTION

END CLASS
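The pseudocode leaves `SampleInterpolator` abstract. For anyone who wants something to experiment with, here is one possible linear version in Python, written against the same shape as `interpolate(readPosition, sampleSourceFunc, bufferSize)`. The function name and the wrap-around handling are assumptions, not part of the original module.

```python
import math
from typing import Callable

def interpolate_linear(read_position: float,
                       sample_source: Callable[[int], float],
                       buffer_size: int) -> float:
    """Linearly interpolate between the two samples around `read_position`.

    `sample_source` is called with integer indices in [0, buffer_size), so it
    can return already-faded samples, as the helper functions in the
    pseudocode do.
    """
    if buffer_size <= 0:
        return 0.0
    position = read_position % buffer_size  # wrap negative or overshooting positions
    base = math.floor(position)
    fraction = position - base
    index_a = int(base) % buffer_size  # guards against float rounding up to buffer_size
    index_b = (index_a + 1) % buffer_size  # the neighbour may wrap to index 0
    return (1.0 - fraction) * sample_source(index_a) + fraction * sample_source(index_b)
```

Passing a sample source that applies the fade per integer index means both neighbours are attenuated before they are blended, so the interpolated output also goes to zero at the write head.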

If you want more information about the process, let me know!

[ Updated to remove the AI-generated explanation of that code. ]