You’re all a knowledgeable bunch, so I thought I’d run by you a half-baked idea I’m interested in building (and, if it works out, releasing as a set of DIY instructions + custom VCV module) this summer. I’m sure you will have useful input/prior art/etc. to share.

I’m interested in making more visually interesting live virtual synth performances, and I thought that using a physical matrix mixer would be more engaging than patching virtual cables on a screen.

If you’re not sure what I mean by “matrix mixer”, refer to this picture of the EMS VCS3 routing matrix, using pins to route inputs to outputs:

Obviously, such a 16x16 mod matrix could be replaced with a MIDI grid of lit MPC-style pads. I could make a grid out of four of the boring 8x8 Novation Launchpads every single artist already uses for a mere €500. Or build my own out of cheap electronics for a mere €100.

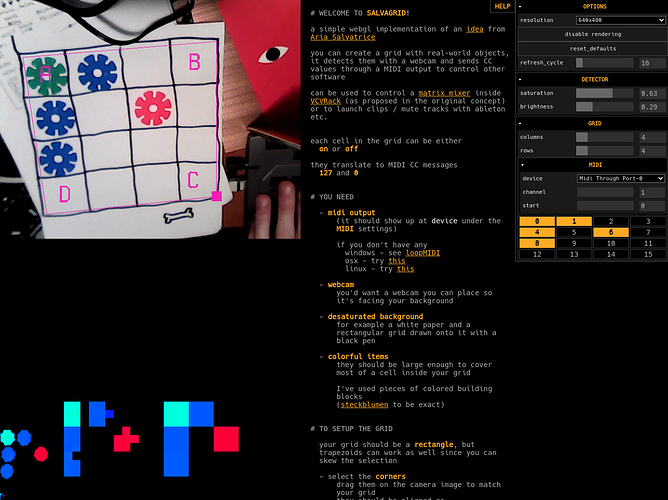

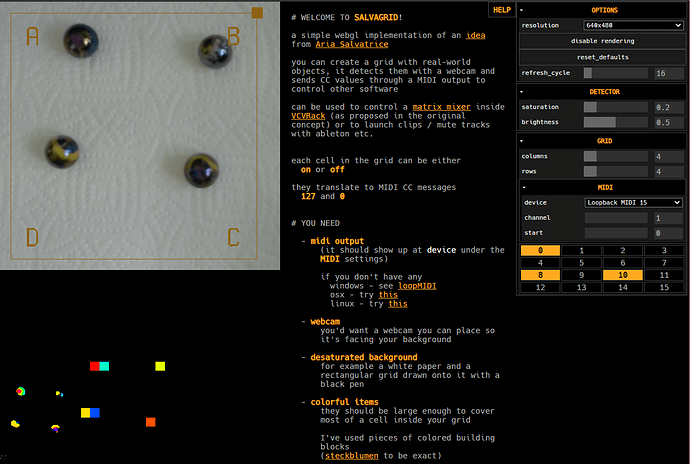

But I was thinking of something more unique: marbles + computer vision.

Think Chinese Checkers:

I bought about €20 worth of 16mm marbles. I picked high-quality stuff! Various colors and textures. All of them opaque. I want them to look as cool as possible in videos.

And I bought €6 worth of webcams. Plural. I bought two of them, 3 euros each, shipping included. I bought the most worthless junk possible. The cheapest garbage Aliexpress was willing to sell me. I 100% expect to get exactly the level of quality I paid for. If I get the project to work with those cams, it means I can trust it will work with any cam.

So, I have only minimal experience with this stuff, but here’s how I’m thinking of going about it. This is where you come in, by talking me out of any bad ideas so I don’t waste my time pursuing them.

- Build a cardboard prototype with only a handful of holes, place a good webcam inside the box rather than above it, put a light above the box, and try to write OpenCV code that detects those holes in real time. Probably gonna use its Python bindings.

- Once it works, experiment with filming the box from above instead.

- Decide whether to go for a setup where the control surface is filmed from inside or from above the box (more reliable vs. more compact build).

- Design an elegant but easy to build controller with something like Blender (which I am familiar with, easier than learning a legit CAD thingie).

- Craft it using a material that’s easy to work with such as MDF, use a router to make the pits, and at the center of the pit, drill a hole for light to seep through. Add larger pits for the spare marbles, like on the image above. Note that I don’t have access to CNC gear, and I want it to be a DIY project other people can reproduce on a <€100 budget anyway.

- Add a camera inside/above the box, and maybe a USB light above it too.

- Adapt the prototype code to that build, and make it output MIDI CC on two MIDI channels. Tweak things until it works satisfactorily with the cheapo low quality webcams.

- Rig it to Bogaudio SWITCH1616.

- Try to get it to work on OSX and Linux.

- Make my own VCV module that mashes up MIDI-CC and a mixer matrix.

- Distribute build instructions, OpenCV <=> MIDI bridge (loopback driver required), custom VCV module.

- Record a tutorial video explaining how to make your own.
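
To make the detection and MIDI steps above a bit more concrete, here is a rough sketch of the logic I have in mind, in plain Python with no dependencies. It is not working project code: all the function names are made up for illustration, the frame is assumed to already be a grayscale 2D array (in practice it would come from OpenCV, e.g. `cv2.VideoCapture` plus a `cv2.cvtColor` conversion to gray), the hole positions are assumed to be known from some calibration step, and the threshold of 128 is an arbitrary guess. It also assumes the camera-inside-the-box setup, where an open hole looks bright and a hole covered by a marble looks dark. The one part that is plain arithmetic is why two MIDI channels are enough: 16x16 = 256 cells, and each channel offers 128 CC numbers.

```python
# Sketch only: every name here is hypothetical, not an OpenCV or VCV Rack API.
GRID = 16               # 16x16 matrix, like the VCS3-style board
CCS_PER_CHANNEL = 128   # MIDI CC numbers run from 0 to 127


def cell_to_cc(row, col, grid=GRID):
    """Map a grid cell to (channel, cc). 256 cells fill exactly two channels."""
    index = row * grid + col
    return index // CCS_PER_CHANNEL, index % CCS_PER_CHANNEL


def read_cells(gray, centers, threshold=128, radius=1):
    """Return {(row, col): True} for every hole covered by a marble.

    gray:    2D sequence of 0..255 brightness values, indexed gray[y][x].
    centers: {(row, col): (x, y)} pixel position of each hole (assumed to
             come from calibration, and to sit at least `radius` pixels
             away from the frame border).
    A hole counts as covered when its small patch is darker than `threshold`.
    """
    states = {}
    for cell, (x, y) in centers.items():
        patch = [
            gray[j][i]
            for j in range(y - radius, y + radius + 1)
            for i in range(x - radius, x + radius + 1)
        ]
        states[cell] = sum(patch) / len(patch) < threshold
    return states


def cc_messages(previous, current):
    """Build raw 3-byte MIDI CC messages for cells whose state changed."""
    messages = []
    for cell, covered in sorted(current.items()):
        if previous.get(cell) != covered:
            channel, cc = cell_to_cc(*cell)
            # 0xB0 is the Control Change status byte; low nibble is the channel.
            messages.append(bytes([0xB0 | channel, cc, 127 if covered else 0]))
    return messages


if __name__ == "__main__":
    # Tiny fake 6x6 frame: dark top-left corner (marble), bright elsewhere.
    frame = [[255] * 6 for _ in range(6)]
    for j in range(3):
        for i in range(3):
            frame[j][i] = 10
    holes = {(0, 0): (1, 1), (0, 1): (4, 4)}
    states = read_cells(frame, holes)
    for message in cc_messages({}, states):
        print(message.hex())
```

Only changed cells produce messages, so a static board stays silent. Actually sending the bytes to VCV still needs a MIDI library and the loopback driver, which this sketch leaves out.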

Feedback more than welcome! And hopefully, progress updates from me every so often.