I’ve been working on a product for Reason (started with V9) but never released it. It uses Reason’s Remote codec and Reason’s feature for exporting all remotable items (knobs, matrix displays … and whatever UI you can interact with) into a CSV-like file. I’ve been parsing this and creating a DSL (domain-specific language) to interact with Reason’s Remote codec, both for controlling devices over MIDI and for receiving MIDI input. You’ll find some videos over here:

I’ve been able to create UIs in Reason and wire in the script. The scripting runs externally in a Jupyter Notebook and sends/receives MIDI messages. I would totally be willing to work on something like this and release it for Rack. Does Rack or the extension offer some model that describes what the buttons are called? What I’d like to see in Rack is a way to uniquely address a module by an ID or path (similar to OSC, or exactly with OSC): something like an API I can call. That could be MIDI, with a layer on top that can list all loaded modules and the properties of each module.
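On the Reason side, that kind of listing falls out of the exported remotable items. Below is a minimal sketch of grouping such a CSV export by device, assuming hypothetical columns `device`, `item`, `min`, `max`; the real export format may differ, and the sample rows are made up.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: column names and rows are assumptions for illustration,
# not the actual format of Reason's remotable-items export.
SAMPLE = """device,item,min,max
Subtractor,Filter Freq,0,127
Subtractor,Amp Env Attack,0,127
Kong,Pad 1 Pitch,-50,50
"""


def load_remotables(text: str) -> dict:
    """Group remotable items by device name, converting ranges to ints."""
    items = defaultdict(list)
    for row in csv.DictReader(io.StringIO(text)):
        items[row["device"]].append(
            {"name": row["item"], "min": int(row["min"]), "max": int(row["max"])}
        )
    return dict(items)


remotables = load_remotables(SAMPLE)
print(sorted(remotables))  # ['Kong', 'Subtractor']
```

A per-device list like this is what a DSL can generate attribute names from.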

I’ve also been thinking about releasing my work as open source. I already got agreement from Propellerheads (sorry, now Reason Studios) that releasing my Python-based remote codec as open source is fine, but I never did it, mainly because I wasn’t sure whether I want to monetise this and because I wasn’t satisfied with the code quality.

@Vortico, is it possible to have an RPC or API that can list devices? Or a WebSocket, or some other low-latency publish/subscribe mechanism that notifies when modules get created or deleted? Or an endpoint returning a JSON description of what a module looks like?
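To make the publish/subscribe idea concrete, here is a minimal in-process sketch. `ModuleRegistry`, its methods, and the `"created"`/`"deleted"` event names are all hypothetical, not anything Rack provides; a real version would push the same events over something like a WebSocket or OSC.

```python
from typing import Callable, Dict, List

# Hypothetical listener signature: (event, module_id, module_slug).
Listener = Callable[[str, int, str], None]


class ModuleRegistry:
    """Tracks loaded modules and notifies subscribers on create/delete."""

    def __init__(self) -> None:
        self._modules: Dict[int, str] = {}  # module id -> module slug
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def _publish(self, event: str, module_id: int, slug: str) -> None:
        for listener in self._listeners:
            listener(event, module_id, slug)

    def create(self, module_id: int, slug: str) -> None:
        self._modules[module_id] = slug
        self._publish("created", module_id, slug)

    def delete(self, module_id: int) -> None:
        slug = self._modules.pop(module_id)
        self._publish("deleted", module_id, slug)

    def list_modules(self) -> Dict[int, str]:
        # Return a copy so callers cannot mutate the registry directly.
        return dict(self._modules)


events = []
registry = ModuleRegistry()
registry.subscribe(lambda ev, mid, slug: events.append((ev, mid, slug)))
registry.create(1, "macro-oscillator-2")
registry.create(2, "macro-oscillator-2")
registry.delete(1)
print(registry.list_modules())  # {2: 'macro-oscillator-2'}
```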

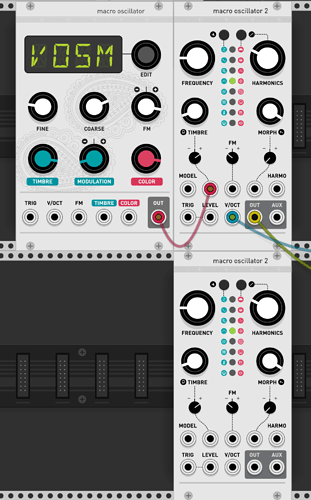

My idea is something like this:

Then I could access the two macro oscillators like this:

`/myprojectname/macro-oscillator-2/1/frequency`
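Such an OSC-style address splits cleanly into project, module, instance, and parameter. A minimal sketch, assuming exactly that four-segment layout; `ParamAddress`, `parse_address`, and `to_identifier` are hypothetical helpers, the last one mapping path segments to the Python attribute names used in the script.

```python
from typing import NamedTuple


class ParamAddress(NamedTuple):
    project: str
    module: str
    instance: int
    param: str


def parse_address(path: str) -> ParamAddress:
    """Split /project/module/instance/param into its four parts."""
    parts = path.strip("/").split("/")
    if len(parts) != 4:
        raise ValueError(f"expected 4 path segments, got {len(parts)}: {path!r}")
    project, module, instance, param = parts
    return ParamAddress(project, module, int(instance), param)


def to_identifier(segment: str) -> str:
    """Map a path segment to a Python name (macro-oscillator-2 -> macro_oscillator_2)."""
    return segment.replace("-", "_")


addr = parse_address("/myprojectname/macro-oscillator-2/1/frequency")
print(addr.module, addr.instance, addr.param)  # macro-oscillator-2 1 frequency
print(to_identifier(addr.module))  # macro_oscillator_2
```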

My Python script (after some setup and initialization) would look like this:

```python
m1 = vcvrack.projects.myprojectname.macro_oscillator_2[1]
m2 = vcvrack.projects.myprojectname.macro_oscillator_2[2]

m1.frequency = kHz(32.103)

# or when using MIDI:
m1.frequency = 103  # whatever value is appropriate
```
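One way the attribute syntax could work is a proxy that turns each assignment into an `(address, value)` message. A minimal sketch, assuming a hypothetical `send` callback standing in for the OSC or MIDI transport; `ModuleGroup` and `ModuleProxy` are made-up names and nothing here talks to Rack.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical transport: receives (address, value); OSC or MIDI would go here.
Sender = Callable[[str, Any], None]


class ModuleProxy:
    """Turns attribute assignments into (address, value) messages."""

    def __init__(self, base_path: str, send: Sender) -> None:
        # object.__setattr__ keeps these internal fields from being sent as params.
        object.__setattr__(self, "_base_path", base_path.rstrip("/"))
        object.__setattr__(self, "_send", send)

    def __setattr__(self, name: str, value: Any) -> None:
        # m.frequency = 103  ->  send("<base_path>/frequency", 103)
        self._send(f"{self._base_path}/{name}", value)


class ModuleGroup:
    """All instances of one module type; indexing selects an instance."""

    def __init__(self, base_path: str, send: Sender) -> None:
        self._base_path = base_path.rstrip("/")
        self._send = send

    def __getitem__(self, index: int) -> ModuleProxy:
        return ModuleProxy(f"{self._base_path}/{index}", self._send)


sent: List[Tuple[str, Any]] = []
macro_oscillator_2 = ModuleGroup(
    "/myprojectname/macro-oscillator-2", lambda addr, value: sent.append((addr, value))
)
m1 = macro_oscillator_2[1]
m2 = macro_oscillator_2[2]
m1.frequency = 103
m2.frequency = 42
print(sent[0])  # ('/myprojectname/macro-oscillator-2/1/frequency', 103)
```

This sketch is write-only: reading `m1.frequency` raises `AttributeError`, since getting values back would need the receive side of the transport.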

I’ll dive into the code when I have some time, or wait to see whether some of the devs here on the forum can give me a proper answer. I would love to contribute something back to VCV.

To be clear: I can totally use the existing MIDI approach, but it would be a lot of setup work upfront. Maybe there is a good approach, or an existing one, that declares what a module looks like in YAML, JSON, XML, or whatever parseable structure.
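With such a declaration, most of the MIDI setup could be generated. A minimal sketch, assuming a hypothetical JSON schema in which each parameter has a `name`, a `min`/`max` range, and an assigned CC number (all values made up; Rack does not ship this schema). It scales a value into the 7-bit range and builds a standard 3-byte MIDI Control Change message (status `0xB0` plus the channel).

```python
import json

# Hypothetical module description: schema, ranges and CC numbers are assumptions.
DESCRIPTION = json.loads("""
{
  "module": "macro-oscillator-2",
  "params": [
    {"name": "frequency", "min": -4.0, "max": 4.0, "cc": 20},
    {"name": "harmonics", "min": 0.0, "max": 1.0, "cc": 21}
  ]
}
""")


def control_change(description: dict, name: str, value: float, channel: int = 0) -> bytes:
    """Scale `value` from the param's range to 0..127 and build a MIDI CC message."""
    params = {p["name"]: p for p in description["params"]}
    param = params[name]  # KeyError if the description has no such param
    lo, hi = param["min"], param["max"]
    clamped = min(max(value, lo), hi)
    scaled = round((clamped - lo) / (hi - lo) * 127)
    status = 0xB0 | (channel & 0x0F)  # Control Change on the given channel
    return bytes([status, param["cc"], scaled])


print(list(control_change(DESCRIPTION, "frequency", 0.0)))  # [176, 20, 64]
```

Plain CC messages only give 7-bit resolution, which is coarse for something like an oscillator frequency; that is one argument for an OSC-style API over the MIDI route.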