Many Rack users have asked about the specifics of polyphony in Rack v1. Here’s my description of the exact behavior of poly cables and poly-supporting modules. Plugin developers should read this thread (or the Voltage Standards section of the manual after Rack v1 is released) before adding polyphony support to their modules.

Core MIDI-CV will have a “channels” setting in its context menu that ranges from “mono” to 16. If a number N from 2 to 16 is selected, the CV and gate outputs will always carry N channels, regardless of how many notes are currently held on your keyboard. If none are held, you’ll have N gate channels at 0V. If 3 are held (and N is at least 3), 3 of the N channels will be at 10V. You can choose from a few polyphony modes to determine which 3 of the N channels are assigned the notes (Reset, Rotate, Reuse, Reassign, etc).
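To make the "always N channels" rule concrete, here is a minimal sketch. The names `GateOutput`, `makeGates`, and `MAX_CHANNELS` are hypothetical and are not taken from the Core MIDI-CV source. The sketch assumes some polyphony mode has already decided which channels hold a note.

```cpp
#include <array>
#include <cstddef>

// Hypothetical names for illustration only, not the Core MIDI-CV code.
constexpr std::size_t MAX_CHANNELS = 16;

struct GateOutput {
    std::size_t channels = 1;                    // N, chosen in the "channels" menu
    std::array<float, MAX_CHANNELS> voltages{};  // every channel starts at 0V
};

// held[c] is true if the polyphony mode has assigned a held note to channel c.
// The output always carries n channels, however many notes are held.
GateOutput makeGates(std::size_t n, const std::array<bool, MAX_CHANNELS>& held) {
    GateOutput out;
    out.channels = (n > MAX_CHANNELS) ? MAX_CHANNELS : n;
    for (std::size_t c = 0; c < out.channels; c++) {
        out.voltages[c] = held[c] ? 10.f : 0.f;  // 10V gate for held notes, else 0V
    }
    return out;
}
```

With N = 4 and notes assigned to channels 1 and 3, the output still has 4 channels: two at 10V and two at 0V.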

Fundamental VCO will accept poly cables in its 1V/oct input. When you patch a cable from MIDI-CV’s CV output into this input, the VCO will run N oscillator engines (which will likely consume significantly less CPU than N copies of Fundamental VCO), and its SIN, TRI, SAW, and SQR outputs will turn into N-channel outputs. Plugging any cable into a polyphonic output will turn it into an N-channel cable.
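As a rough sketch of "one engine per input channel", the function below maps an N-channel 1V/oct input to N oscillator frequencies. The name `engineFrequencies` is hypothetical, and the choice of 0V = C4 (about 261.63 Hz) is an assumption not stated in this post. The real VCO would also track phase and waveform per engine.

```cpp
#include <cmath>
#include <vector>

// Assumption: 0V corresponds to C4, and each volt doubles the frequency (1V/oct).
constexpr float FREQ_C4 = 261.6256f;

// Hypothetical helper: one frequency per channel of the pitch input,
// so an N-channel cable yields N oscillator engines.
std::vector<float> engineFrequencies(const std::vector<float>& pitchVolts) {
    std::vector<float> freqs;
    freqs.reserve(pitchVolts.size());
    for (float v : pitchVolts) {
        freqs.push_back(FREQ_C4 * std::pow(2.f, v));
    }
    return freqs;
}
```

The number of output channels simply follows the number of input channels, which is what makes the SIN, TRI, SAW, and SQR outputs N-channel.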

Fundamental VCF will accept polyphonic cables too, in its audio input and FREQ input (and RES and DRIVE). If an N-channel cable is patched into the audio input, the VCF will run N filter engines. If an N-channel cable is then patched into the FREQ input, each filter engine will adjust its cutoff frequency based on the associated channel in the FREQ input. This allows the VCF to be controlled by a polyphonic ADSR envelope generator using the polyphonic gates from Core MIDI-CV, to produce the famous behavior of analog polysynth keyboards. If, however, a monophonic cable is patched into the FREQ input, the same cutoff will be used across all N filter engines. Finally, if the cable patched into FREQ carries fewer channels than the audio input (M channels, with M<N), the first M filter engines will use their associated channel, and all higher-numbered engines will use channel 1 of the FREQ input. This is how all modules with multiple poly inputs should behave when cables with different channel counts are mixed.
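The channel-matching rule above can be sketched as a single lookup. The helper name `polyVoltage` is hypothetical and not part of any Rack API described here. Treating an unpatched input as 0V is also an assumption. Note that "channel 1" in the text is index 0 in the code.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper written from the description above.
// `input` holds the voltages of the cable patched into a secondary input
// (e.g. FREQ); `c` is the zero-based index of the engine being processed.
float polyVoltage(const std::vector<float>& input, std::size_t c) {
    if (input.empty()) {
        return 0.f;       // assumption: an unpatched input reads as 0V
    }
    if (c < input.size()) {
        return input[c];  // engine c uses its associated channel
    }
    return input[0];      // mono cable, or M<N: fall back to the first channel
}
```

A monophonic cable needs no special case here: with one channel, every engine beyond the first falls through to `input[0]`, so all N engines share the same cutoff.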

Polyphonic cables will be rendered differently from normal (monophonic) cables. Currently we’ve decided to draw them thicker, perhaps with a number on them to display the number of channels they carry. The plug LED will be blue instead of green/red, representing the total electrical power carried by the cable. (The exact formula will be the RMS, $\sqrt{\sum_n V_n^2}$, if you’re interested.) A word of enlightenment: all Rack v1 cables will in fact be polyphonic. What I’m calling monophonic/normal cables are actually polyphonic cables with 1 channel. They are implemented as one and the same.
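For the curious, the plug LED formula quoted above is easy to compute. This sketch follows the expression literally, as the square root of the sum of squared channel voltages with no division by the channel count. The function name `cableLevel` is hypothetical, and how the result maps to LED brightness is not specified here.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper: evaluates sqrt(sum_n V_n^2) over all channels of a cable.
float cableLevel(const std::vector<float>& voltages) {
    float sumSquares = 0.f;
    for (float v : voltages) {
        sumSquares += v * v;
    }
    return std::sqrt(sumSquares);
}
```

For a 1-channel cable this reduces to the absolute value of its voltage, which fits the point that mono cables are simply poly cables with one channel.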

FAQ

What if you plug a poly cable, coming from a poly output, into a non-poly (mono) input? The mono input should simply use the first channel of the cable.

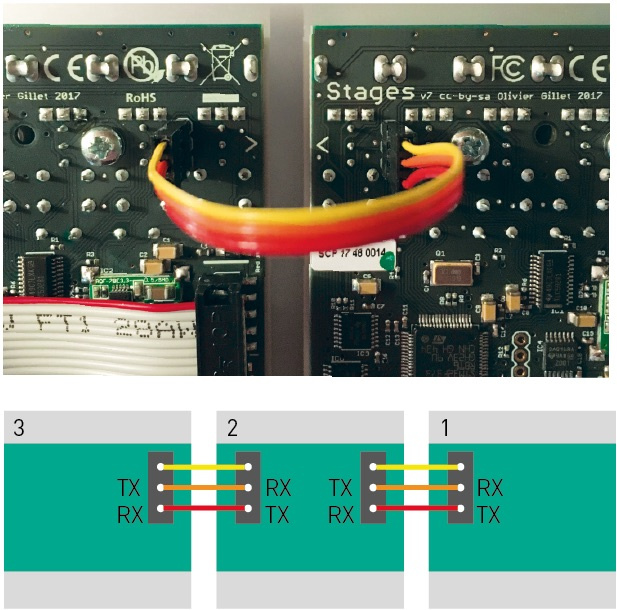

Can poly cables carry a collection of signals that are unrelated to polyphonic voices? Yes. Even though they are called “polyphonic cables”, they can be used for surround sound signals, multiplexing modules similar to the Doepfer A-180-9, or for allowing communication between modules and their expanders (a famous example is Make Noise Brains and Pressure Points).

Can you have a 0-channel cable? Yes, there are a few exceptional cases where this is desired, e.g. when the number of channels is dynamic. However, the first channel must still be set to some sane default, such as 0V, to ensure compatibility with mono inputs that the output might be connected to.
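Here is a minimal sketch of why the first channel needs a sane default. It assumes a cable stores a fixed array of 16 voltages alongside its channel count, which is an illustration and not necessarily how Rack implements it. The names `PolyCable`, `setChannels`, and `monoRead` are hypothetical.

```cpp
#include <array>
#include <cstddef>

// Hypothetical cable model for illustration only.
struct PolyCable {
    static constexpr std::size_t kMaxChannels = 16;
    std::size_t channels = 1;
    std::array<float, kMaxChannels> voltages{};  // all 0V initially

    // Change the channel count and reset every unused channel to 0V.
    // With n == 0 this also resets the first channel.
    void setChannels(std::size_t n) {
        if (n > kMaxChannels) {
            n = kMaxChannels;
        }
        for (std::size_t c = n; c < kMaxChannels; c++) {
            voltages[c] = 0.f;
        }
        channels = n;
    }

    // A mono input ignores the channel count and reads the first channel.
    float monoRead() const {
        return voltages[0];
    }
};
```

Because a mono input always reads the first channel, a 0-channel cable that left stale data there would leak an old voltage into the downstream module. Resetting it to 0V avoids that.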